Seekr: Deploying Trusted AI in Intel® Developer Cloud

by Harmen Van der Linde

Senior Director Product Management, Intel® Developer Cloud

The full version of this blog was first published on Intel.com on April 9, 2024.

About Intel Developer Cloud

Since its general release last September, Intel® Developer Cloud has become a widely used platform for evaluating new Intel® hardware and deploying AI workloads. It is built on a scale-out infrastructure and an open software foundation, including oneAPI, that supports both benchmarking workloads for evaluation and the large-scale, multi-node deployments required for AI model training. Seekr, a fast-growing artificial intelligence startup specializing in reliable content evaluation and generation, uses Intel Developer Cloud to train LLMs at scale.

Over the past months, Intel Developer Cloud’s compute capacity for enterprise customers has steadily increased with additional U.S. regions, new state-of-the-art hardware platforms, and new service capabilities. Our bare metal as a service (BMaaS) offering now includes large-scale Intel® Gaudi® 2 clusters (128 nodes), Intel® Max Series GPU 1100/1550, virtual machines (VMs) running on Gaudi 2 AI accelerators, and Intel® Kubernetes Service for cloud-native AI/ML training and inference workloads. Access to these multiarchitecture systems on the latest hardware is unique: today, no other cloud service provider offers it.

Cost-efficient Services, Hardware Access for AI Startups

With the explosive growth of AI and ever-increasing demand for AI computing, Intel Developer Cloud is an attractive deployment environment for AI startups seeking access to industry-leading Intel CPUs, GPUs, and Gaudi 2 accelerators. Startups use it both for internal development and to support AI production services for their customers. Seekr is a prime example of an AI startup using Intel Developer Cloud to offer services to its customers. In collaboration with Intel’s award-winning Ignite and Liftoff incubation programs, Intel’s AI cloud gives startups a kickstart: they can use AI compute quickly, without the hassle of setting up their own infrastructure, and at lower cost than other options.

An AI Supercomputer in the Making

With the increasing popularity of LLMs, the compute required to train and optimize these models has grown rapidly, becoming a bottleneck for many AI companies trying to quickly develop and deploy commercially viable GenAI solutions.

Intel Developer Cloud introduced both single-host and clustered deployments of industry-leading Intel Gaudi 2 AI accelerators. Clustered Gaudi 2 systems are interconnected in a full mesh with high-speed networking, and a software control plane manages and scales out cluster configurations automatically. Our unique software-enabled compute infrastructure can seamlessly expand generative AI/LLM deployments using large Gaudi 2 compute clusters, powering large-scale LLMs and multimodal models.

Additionally, Intel Kubernetes Service is now available as a runtime environment for distributed AI container workloads, accommodating large AI deployments on scale-out clusters.

Trusted AI Challenges

Enterprise marketers, advertisers, and decision-makers rely on content marketing strategies to reach their audiences and are constantly challenged to do so in a way that builds contextual relevance, trust, and credibility. Today, consumers of online content suffer from information overload, misinformation, hallucinations, and inappropriate content, eroding the trust that enterprise marketers seek. Even more importantly, being associated with such content, or with platforms that host it, puts a company’s reputation at risk.

Seekr: Trustworthy AI for Content Evaluation and Generation at Reduced Costs

Seekr unlocks productivity and fuels innovation for businesses of all sizes through responsible and explainable AI solutions, including AI infrastructure with foundation models and industry-agnostic products in content scoring and generative AI.

One unique use case is brand safety and suitability, where Seekr uses its content acquisition and patented content scoring and analysis to help brands and publishers expand their reach to larger audiences, while confidently aligning with brand-suitable content. Seekr’s real-time insight into content quality and subjectivity gives businesses the confidence that their content is reaching the intended audience during the right moments, growing brand reach and revenue responsibly.

After a successful deployment and benchmarking study, the company began migrating its on-prem AI service platform into Intel Developer Cloud to help scale its growing business through Intel’s superior price-performance.

“This strategic collaboration with Intel allows Seekr to build foundation models at the best price and performance using a super-computer of 1000s of the latest Intel Gaudi chips, all backed by high bandwidth interconnectivity. Seekr’s trustworthy AI products combined with the ‘AI first’ Intel Developer Cloud reduces errors and bias, so organizations of all sizes can access reliable LLMs and foundation models to unlock productivity and fuel innovation, running on trusted hardware.”

Rob Clark, Seekr President & CTO

SeekrAlign™: Contextual AI for the Advertising Industry

An issue facing brands and ad agencies today is finding brand-safe advertising opportunities in a media landscape that is increasingly complex and difficult to navigate. In podcasting, for example, first-gen audio tools created for this task were overcomplicated and unreliable: they were prone to both false negatives and false positives, letting isolated keywords trigger risk content ratings. SeekrAlign, an advanced brand safety and suitability analytics solution, is built on an LLM that combines proprietary content evaluation and scoring capabilities to provide unprecedented visibility into thousands of podcasts, aligned with established standards in the content and advertising industry.

SeekrAlign simplifies finding safe and suitable podcasts by marrying world-class engineering with innovative, transparent ratings, allowing brands to navigate with nuance so they can confidently grow their audio campaigns with greater clarity and speed. It goes beyond the Global Alliance for Responsible Media (GARM) brand safety and suitability floor, enabling brands to safely reach a much larger audience.

A unique feature of SeekrAlign is its patented Civility Score™, which tracks personal attacks, including those that may not register as hate speech under GARM but are still hostile in nature. End users can overlay their desired risk filters, including GARM, giving brands unparalleled control and transparency in the podcast industry. SeekrAlign has already evaluated and scored more than 20 million podcast minutes, a figure that will increase 5x by the end of the year, far outpacing competitive offerings. A growing list of leading brands use SeekrAlign, including Babbel, Equativ, Indeed, MasterClass, SimpliSafe, and Tommy John, among others.

“Seekr’s AI-driven content analysis platform goes beyond anything we’ve seen available on the market. It helps us simplify our process of finding brand-safe podcasts we feel comfortable advertising with and are confident will help distribute SimpliSafe’s messaging responsibly to the right target audience.”

Nicholas Giorgio, Director, Customer Acquisition at SimpliSafe


SeekrAlign Deployment in Intel Developer Cloud

Seekr has been using a regional colo provider to host a server fleet of GPU- and CPU-enabled systems. This self-managed compute infrastructure is used for LLM development and supports SeekrAlign. With customer growth and the increasing size of its LLM deployments, Seekr sought a cloud services provider to help scale its business through superior price-performance.

After successful fine-tuning and inference benchmarking of Seekr’s LLMs on Intel Gaudi 2 accelerators and Intel Xeon processors, Seekr chose Intel Developer Cloud as its preferred computing infrastructure for LLM development and production deployment support.

Seekr plans a phased deployment to migrate the company’s solution platform into Intel Developer Cloud. It uses a mix of AI-optimized compute instances in our cloud to optimize the cost-performance of its application workloads, including:

- Intel Gaudi 2 accelerators—The 7B LLM model that powers the SeekrAlign solution is deployed on a cluster of Gaudi 2 systems to perform sensitive content categorization and risk assessments.

- Intel Data Center GPU Max Series—Seekr uses PyTorch* for transcription and diarization processing on a cluster of Intel GPU-powered systems.

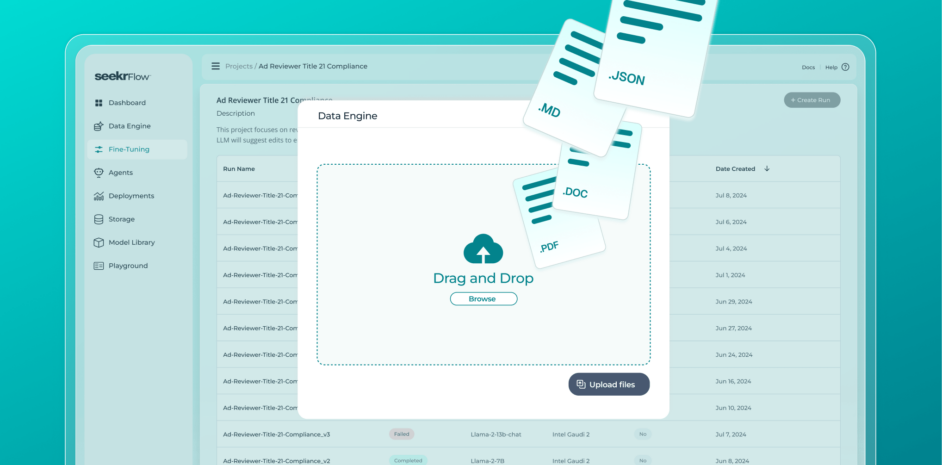

- Intel® Xeon® Scalable processors—The company uses a cluster of 4th gen Xeon CPU-enabled systems for application monitoring, storage management, and vector and inverted-index database services. These support Seekr’s Retrieval Augmented Generation services, and AI-as-a-service platform SeekrFlow™, an end-to-end LLM development toolset focused on building principle aligned LLMs using scalable and composable pipelines. Plans include utilizing 5th gen Intel® Xeon® Scalable processors later this year.
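The Xeon cluster hosts vector and inverted-index database services that back Seekr’s RAG services, but the post does not say how the two are combined. As a minimal, hypothetical sketch of one common pattern, an inverted index can supply keyword candidates that are then ranked by vector similarity. The toy corpus, the three-dimensional vectors, and all function names below are illustrative assumptions, not Seekr’s implementation; production systems use learned embeddings and dedicated database engines.

```python
import math
from collections import defaultdict

# Hypothetical toy corpus: doc ID -> (text, hand-written embedding).
# Real RAG systems would use model-generated embeddings instead.
DOCS = {
    "d1": ("podcast episode about home security tips", [0.9, 0.1, 0.0]),
    "d2": ("episode discussing language learning apps", [0.1, 0.8, 0.2]),
    "d3": ("security news and privacy debate", [0.7, 0.2, 0.3]),
}


def build_inverted_index(docs):
    """Map each lowercase token to the set of document IDs containing it."""
    index = defaultdict(set)
    for doc_id, (text, _vec) in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return index


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_retrieve(query, query_vec, docs, index, k=2):
    """Collect keyword candidates from the inverted index, then rank them
    by vector similarity; fall back to scanning every document when no
    query token appears in the index."""
    candidates = set()
    for token in query.lower().split():
        candidates |= index.get(token, set())
    if not candidates:
        candidates = set(docs)
    ranked = sorted(
        candidates, key=lambda d: cosine(query_vec, docs[d][1]), reverse=True
    )
    return ranked[:k]


index = build_inverted_index(DOCS)
print(hybrid_retrieve("security", [1.0, 0.0, 0.0], DOCS, index))  # ['d1', 'd3']
```

The inverted index narrows the search cheaply to documents that share terms with the query, while the vector score orders those candidates by semantic closeness; the retrieved passages would then be passed to the LLM as context.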

“The Seekr-Intel collaboration unlocks the capabilities of running AI on hybrid compute. Intel GPUs and Gaudi 2 accelerators are leveraged to both train and serve at scale, large and medium size transformers. We saw improved performance both for model training and inference, when compared to other chips in the market.”

Stefanos Poulis, PhD, Seekr Chief of AI Research & Development

In the next phase of its Intel Developer Cloud integration, Seekr plans to use large-scale Gaudi 2 based clusters with our Kubernetes Service to train its LLM foundation model, and to add CPU and GPU compute capacity to grow its trustworthy AI service offering. For more details, including benchmarks comparing its on-prem system with Intel Developer Cloud, see this case study: How Seekr is Building Trustworthy LLMs at Scale in Intel® Developer Cloud.