
Understanding AI Agents: The Next Step in Enterprise Transformation

AI agents mark a transformative shift in enterprise AI. No longer just passive tools, they’re emerging as a new layer of infrastructure capable of reasoning, acting, and learning in context.

As we move beyond copilots and into agentic systems, the question is no longer “What can AI say?” but “What can AI do—and can we trust it to do it well?” Embracing this new paradigm will require enterprises to rethink how they design, deploy, and govern intelligent systems.

Key takeaways

- 2025 has been dubbed “the year of AI agents.” Enterprises are racing to understand and adopt these technologies, and from customer support to IT and sales, agents are already helping to speed up decision-making and connect insights across systems.

- But what are AI agents, exactly? Think of them as coworkers, assistants, or autonomous apps. They can plan, reason, and take action toward defined goals with minimal human oversight.

- With more autonomy comes more risk. Empowering agents to make decisions you can trust requires the highest levels of transparency and explainability.

- To get started, identify a high-value, low-risk use case. Define how much autonomy makes sense. Then, align the agent with your enterprise data and internal governance frameworks to build trust and drive ROI.

The time for AI agents is now

As enterprises move from AI experimentation to optimization, AI agents are the next phase of innovation. According to Gartner, by 2028, 33% of enterprise software applications will include agentic AI, and at least 15% of day-to-day work decisions will be made autonomously.

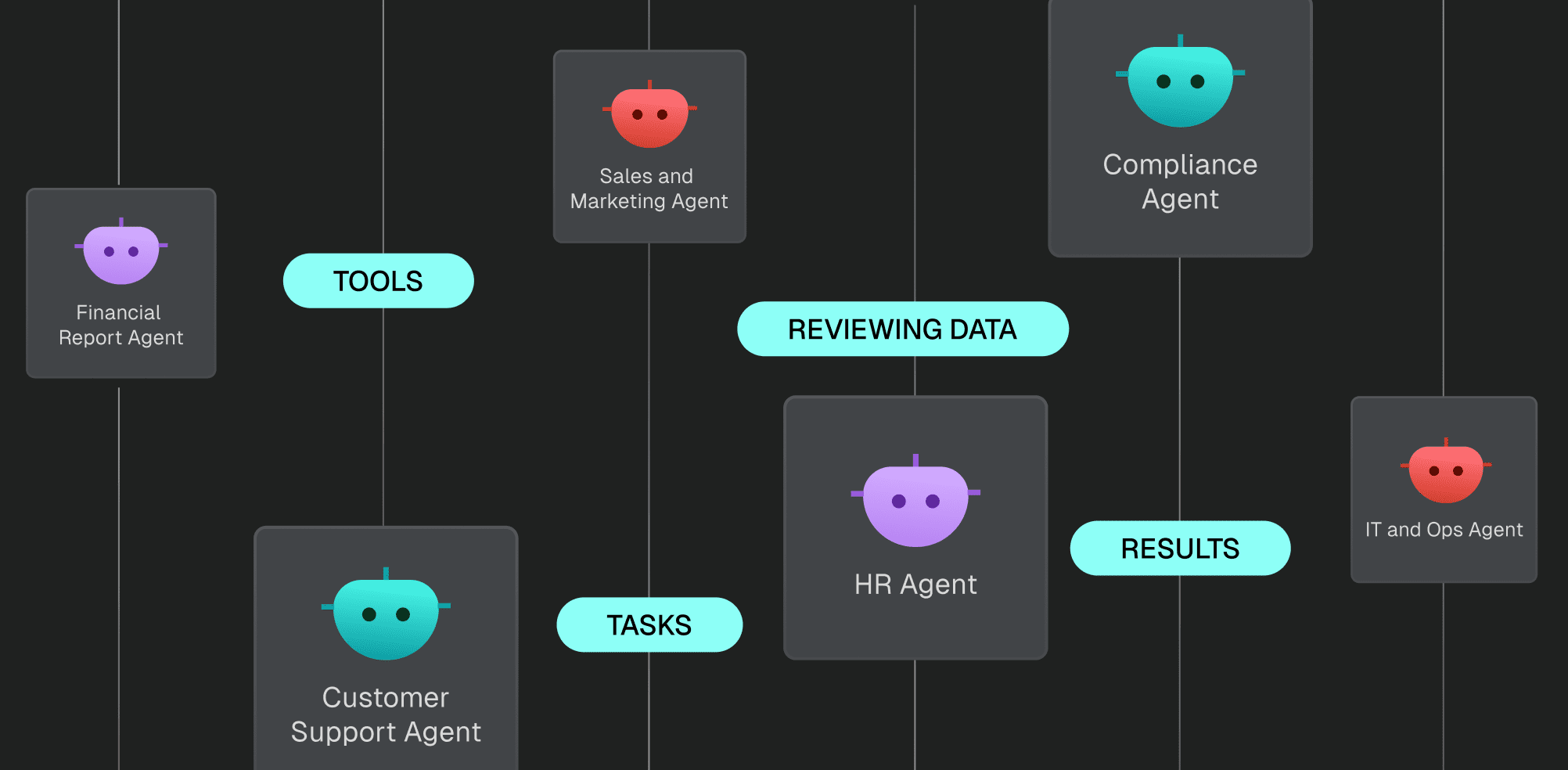

More than chatbots or tools, agents can reason, plan, act, and collaborate with purpose. They can operate across systems, coordinate tasks, and learn continuously, improving outcomes and delivering more value over time.

As we move deeper into 2025, agents are transitioning from experimental technology to essential infrastructure. Successful enterprises will balance ambition with prudence, leveraging autonomous capabilities while maintaining the oversight and governance needed to build trust and unlock new levels of growth.

Transforming the way we work

Today’s enterprises are overwhelmed with fragmented data, disconnected systems, and manual workflows. AI agents help unify and streamline work by:

- Synthesizing data across tools to reduce search and response times

- Automating multi-step tasks across departments

- Assisting employees with real-time, context-aware support

- Reducing manual handoffs and repetitive work

- Enabling secure, auditable actions across workflows

These capabilities can unlock both time and strategic focus for your team. Instead of replacing people, agents empower them to accomplish more, faster, so they can dedicate more time and energy to their expertise.

To unlock this value, though, you must first understand what AI agents actually are and how they differ from other AI systems.

What are AI agents?

Where traditional AI models typically provide one-time outputs in response to prompts, agents are goal-oriented systems. They don’t just answer questions—they complete jobs.

Core components of an AI agent include:

- A reasoning engine to plan and execute multi-step processes

- Contextual memory to retain facts, past actions, and constraints

- Access to tools or APIs to interact with external systems

- A goal-driven architecture that guides task selection and execution

- Autonomy to define and adjust the best path to an outcome

For example, you might ask an agent: “Generate a Q2 business review presentation for the executive team.”

The agent would break this into subtasks like retrieving data, analyzing KPIs, identifying trends, and drafting slides, continuing to refine until the output meets the intended goal.
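The decomposition described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `Agent` class, its methods, and the four tool names are hypothetical, and each tool is a stub where a real system would call a data source or a language model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Every name here is hypothetical; the tools are stubs standing in for real systems.
Tool = Callable[[Dict[str, str]], str]


@dataclass
class Agent:
    goal: str
    tools: Dict[str, Tool]                                 # access to tools or APIs
    memory: Dict[str, str] = field(default_factory=dict)   # contextual memory

    def plan(self) -> List[str]:
        # Stand-in for the reasoning engine: a real agent would ask a model
        # to break the goal into subtasks instead of returning a fixed list.
        return ["retrieve_data", "analyze_kpis", "identify_trends", "draft_slides"]

    def goal_met(self) -> bool:
        # Simplistic completion check: a non-empty draft exists.
        return bool(self.memory.get("draft_slides"))

    def run(self, max_rounds: int = 3) -> Dict[str, str]:
        # Repeat the plan until the goal check passes or the round budget runs out.
        for _ in range(max_rounds):
            for step in self.plan():
                # Each tool can read what earlier steps stored in memory.
                self.memory[step] = self.tools[step](self.memory)
            if self.goal_met():
                break
        return self.memory


tools = {
    "retrieve_data": lambda m: "Q2 revenue by month: 10, 12, 15",
    "analyze_kpis": lambda m: "Q2 revenue total: 37",
    "identify_trends": lambda m: "revenue rose each month",
    "draft_slides": lambda m: f"Slide 1: {m['analyze_kpis']}; Slide 2: {m['identify_trends']}",
}

agent = Agent(goal="Generate a Q2 business review presentation", tools=tools)
result = agent.run()
print(result["draft_slides"])
```

The point of the sketch is the shape: a goal, a plan, shared memory, and tools, with a loop that stops only when a completion check passes.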

Leading enterprise approaches are treating agents as configurable systems, not just prebuilt assistants. This includes modular components for planning, tool calling, memory, and observability—all operating within secured infrastructure.

Agents are characterized by:

- Environment: This is defined by the use case, e.g., a CRM system for sales analytics or a corporate knowledge base for employee onboarding.

- Available toolkit: The agent’s capabilities expand based on the specific tools and functions it can access.

Tools are what allow agents to interact with the outside world. They fall into three categories:

- Knowledge augmentation: Context construction, internet search, vector search, SQL queries

- Capability extension: Calculators, code interpreters, or computer-using agents that interact with digital environments like a human

- Action-taking: Tools that change the state of external systems, such as a support chatbot resetting passwords or processing refunds, or the controls of an autonomous vehicle. Because agents using them can alter real systems and even act in the physical world, these are the most powerful (and potentially most dangerous) of the three categories.
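One way to make the three categories concrete is to tag each tool with its category and gate the riskiest one. The sketch below is illustrative only: `ToolKind`, `Tool`, `REGISTRY`, and `call_tool` are hypothetical names, and the tool bodies are stubs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class ToolKind(Enum):
    KNOWLEDGE = "knowledge augmentation"
    CAPABILITY = "capability extension"
    ACTION = "action-taking"


@dataclass(frozen=True)
class Tool:
    name: str
    kind: ToolKind
    fn: Callable[[str], str]


# Hypothetical registry; each function is a stub for a real integration.
REGISTRY = {
    t.name: t
    for t in [
        Tool("vector_search", ToolKind.KNOWLEDGE, lambda q: f"top passages for {q!r}"),
        Tool("calculator", ToolKind.CAPABILITY,
             lambda expr: str(sum(float(x) for x in expr.split("+")))),
        Tool("reset_password", ToolKind.ACTION, lambda user: f"password reset for {user}"),
    ]
}


def call_tool(name: str, arg: str, allow_actions: bool = False) -> str:
    tool = REGISTRY[name]
    # Action-taking tools change external state, so they need explicit opt-in.
    if tool.kind is ToolKind.ACTION and not allow_actions:
        raise PermissionError(f"{name} is an action-taking tool and is not enabled")
    return tool.fn(arg)


print(call_tool("calculator", "2+3"))
print(call_tool("reset_password", "jdoe", allow_actions=True))
```

Tagging tools this way lets read-only lookups run freely while anything that changes state stays behind an explicit switch.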

What sets agents apart is their ability to reflect, course-correct, and adapt over time. They support work that would otherwise require significant human input or cross-functional coordination.

Agentic workflows vs. AI agents: Unpacking the difference

As you evaluate how to apply agentic systems, it’s important to distinguish between agentic workflows and AI agents. Both involve AI taking action, but the degree of autonomy and complexity is different.

Understanding this distinction will help you determine the right approach for your organization’s needs, risk tolerance, and infrastructure maturity.

Agentic workflows: Predictable and structured

Agentic workflows use language models in a sequence of clearly defined steps. These systems support task automation and decision-making, but they follow a predetermined path and can’t deviate from it.

For example, you might prompt a system: “Summarize this week’s customer support tickets.”

The agentic workflow retrieves data, categorizes issues, and generates a summary in a fixed sequence, with human review before final output.

Agentic workflows are:

- Deterministic: You define the steps and order in advance. The system follows a clear path.

- Human-in-the-loop: Humans verify outputs and make the final decision.

- Ideal for: Structured environments with a clear need for oversight and traceability
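The ticket-summary example can be sketched as a fixed pipeline with a review gate at the end. This is a simplified illustration under stated assumptions: the ticket data is made up, all function names are hypothetical, and keyword rules stand in for the language model calls a real workflow would use to categorize and summarize.

```python
from collections import Counter
from typing import Callable, List, Optional

# Made-up sample data for illustration only.
TICKETS = [
    {"id": 1, "text": "Cannot log in after password change"},
    {"id": 2, "text": "Refund not received"},
    {"id": 3, "text": "Login page times out"},
]


def retrieve_tickets() -> List[dict]:
    return TICKETS


def categorize(tickets: List[dict]) -> Counter:
    # Keyword rules stand in for a model-based classifier.
    def label(text: str) -> str:
        t = text.lower()
        if "log in" in t or "login" in t:
            return "login"
        if "refund" in t:
            return "billing"
        return "other"

    return Counter(label(t["text"]) for t in tickets)


def summarize(counts: Counter) -> str:
    parts = [f"{n} {category}" for category, n in counts.most_common()]
    return "This week's tickets: " + ", ".join(parts)


def run_workflow(approve: Callable[[str], bool]) -> Optional[str]:
    # The steps and their order are fixed in advance; nothing here is decided at runtime.
    draft = summarize(categorize(retrieve_tickets()))
    # Human review gate: the draft is released only if the reviewer approves it.
    return draft if approve(draft) else None


print(run_workflow(approve=lambda draft: True))
```

Because the sequence is hard-coded, the same input always takes the same path, which is what makes this style easy to audit.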

AI agents: Autonomous and goal-driven

Where agentic workflows can’t stray from the planned sequence, agents operate with significantly more freedom. They are more like skilled consultants who can assess a situation, choose an approach, and adapt their strategy based on what they discover along the way.

For example, an AI agent may receive the goal: “Resolve this customer ticket effectively.”

The agent then determines its own course of action: analyzing the ticket, deciding whether additional customer data is needed, selecting an appropriate response strategy, evaluating the need for escalation, and adjusting based on the customer’s responses.

AI agents are:

- Non-deterministic: The agent defines its own path to success, including what tasks to take on and in what order.

- Autonomous: The agent reasons about how best to proceed and reflects on its progress, refining its actions based on feedback or failure.

- Dynamic: Rather than executing a static workflow, the agent plans as it goes, continually evaluating what needs to happen next.

- Ideal for: Complex, cross-functional work that requires flexibility and initiative
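The difference from a fixed sequence shows up in code as a loop that picks the next action from the current state. The sketch below is a toy version of the ticket example: `choose_next_action` is a hypothetical rule-based stand-in for the model that would make this decision in a real agent, and the actions are stubs.

```python
from typing import Callable, Dict, List


def analyze_ticket(state: dict) -> None:
    text = state["ticket"].lower()
    state["analysis"] = {
        "needs_account_data": "charge" in text,
        "severity": "high" if "fraud" in text else "normal",
    }


def fetch_account(state: dict) -> None:
    state["account"] = {"plan": "pro"}  # stub for a CRM lookup


ACTIONS: Dict[str, Callable[[dict], None]] = {
    "analyze_ticket": analyze_ticket,
    "fetch_account": fetch_account,
}


def choose_next_action(state: dict) -> str:
    # Stand-in policy; a real agent would ask a language model to decide.
    if "analysis" not in state:
        return "analyze_ticket"
    if state["analysis"]["severity"] == "high":
        return "escalate"
    if state["analysis"]["needs_account_data"] and "account" not in state:
        return "fetch_account"
    return "reply"


def resolve(ticket: str, max_steps: int = 5) -> List[str]:
    state = {"ticket": ticket}
    trace: List[str] = []
    for _ in range(max_steps):           # step budget keeps the loop bounded
        action = choose_next_action(state)
        trace.append(action)
        if action in ("reply", "escalate"):  # terminal actions end the run
            break
        ACTIONS[action](state)
    return trace


print(resolve("I was charged twice"))
print(resolve("Possible fraud on my card"))
```

The two calls take different paths through the same code, which is the non-determinism described above: the path depends on what the agent finds, not on a script.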

This flexibility makes AI agents powerful, but also introduces new requirements for trust, explainability, and governance—especially in high-stakes or regulated environments.

Key difference: Agentic workflows ask, “How do we automate this process?” Agents ask, “How do we achieve this outcome?”

Where to start: Practical enterprise use cases

The success of an AI agent hinges on its ability to operate autonomously while meeting the accuracy and efficiency standards your requirements demand.

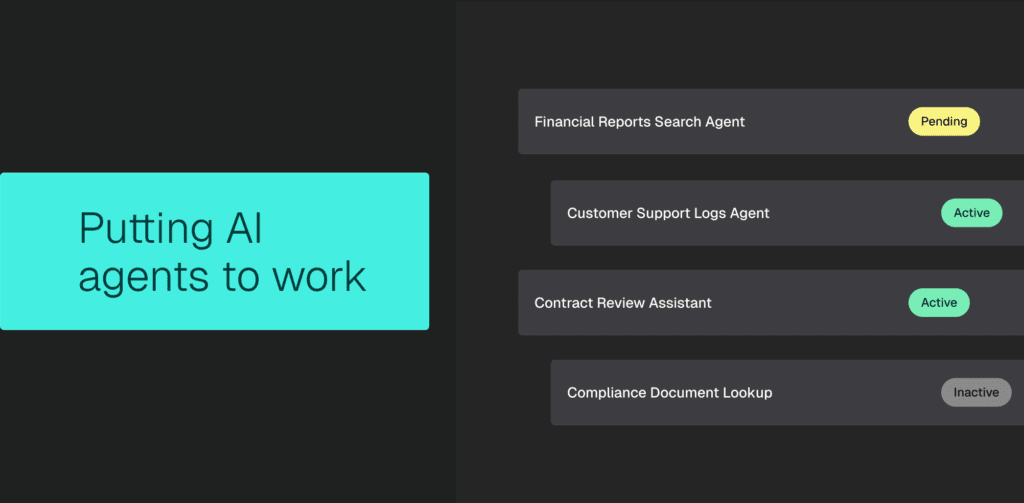

Organizations are starting with practical, scoped use cases where agents reduce complexity or augment capacity.

- Customer support: Drafting context-aware responses, surfacing past case data, or automating resolutions

- IT and operations: Running diagnostic steps, monitoring processes, or coordinating multi-system tasks

- Sales and marketing: Personalizing outreach, preparing reports, and enriching CRM data automatically

- HR and internal knowledge: Answering common employee questions, guiding onboarding, or helping staff navigate internal policies

- Compliance: Tracking policy adherence, documenting decisions, or flagging process anomalies in real time

How to deploy agents you can trust

Integrating AI agents into enterprise environments requires more than plugging in a tool. It’s a strategic decision that touches infrastructure, workflows, and governance.

While the opportunity to automate with agents is significant, so are the risks. Finding the right balance means building systems that are not only capable and adaptive, but also explainable, secure, and aligned with your organization’s standards. These six principles can help:

1. Start with well-defined, measurable use cases

Begin in areas where agents can deliver immediate, measurable value while minimizing risk—like automating routine data analysis, handling standard customer queries that follow predictable patterns, or generating internal reports.

2. Match autonomy to risk

Not every agent needs full independence. Set clear rules for when an agent can act, when it must ask, and when it simply suggests.

For example, you might require the agent to use a specific tool like FileSearch when generating responses, so that it relies on trusted internal data sources. You could also require it to ask for user permission before searching anything outside the organization’s vector store, such as public web content.
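Rules like these can be written down as a small policy table. The following is a sketch under assumptions: the `Autonomy` levels mirror the act, ask, and suggest distinction above, while the tool keys (`file_search`, `web_search`, `issue_refund`) and the `decide` function are hypothetical and not tied to any particular product.

```python
from enum import Enum


class Autonomy(Enum):
    ACT = "act"          # agent may run the tool on its own
    ASK = "ask"          # agent must get user permission first
    SUGGEST = "suggest"  # agent may only propose the step to a human


# Hypothetical policy table mapping tool names to autonomy levels.
POLICY = {
    "file_search": Autonomy.ACT,      # trusted internal data
    "web_search": Autonomy.ASK,       # external sources need permission
    "issue_refund": Autonomy.SUGGEST, # high-impact action stays with a human
}


def decide(tool: str, user_approved: bool = False) -> str:
    # Tools missing from the policy default to the least autonomy.
    level = POLICY.get(tool, Autonomy.SUGGEST)
    if level is Autonomy.ACT:
        return "run"
    if level is Autonomy.ASK:
        return "run" if user_approved else "ask_user"
    return "suggest_only"


print(decide("file_search"))
print(decide("web_search"))
print(decide("web_search", user_approved=True))
```

Defaulting unknown tools to the most restrictive level means a newly added tool cannot act on its own until someone deliberately grants it that right.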

3. Build in explainability

Enterprise agents must show their work: what tools they used, what data they retrieved, and why they chose a given path. This transparency is what transforms AI from a black-box helper into a trusted system.
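In practice, showing the work usually means recording a structured trace of each step. Here is a minimal sketch of what such a record could look like. The `TraceStep` and `Trace` classes and their field names are hypothetical, and the recorded values are made-up examples.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class TraceStep:
    tool: str            # which tool was used
    reason: str          # why the agent chose this step
    inputs: dict         # what it asked for
    output_summary: str  # what data came back


@dataclass
class Trace:
    steps: List[TraceStep] = field(default_factory=list)

    def record(self, tool: str, reason: str, inputs: dict, output_summary: str) -> None:
        self.steps.append(TraceStep(tool, reason, inputs, output_summary))

    def to_json(self) -> str:
        # Serialize the full trace so it can be stored or reviewed later.
        return json.dumps([asdict(step) for step in self.steps], indent=2)


trace = Trace()
trace.record(
    tool="file_search",
    reason="Policy requires answers to come from internal documents",
    inputs={"query": "refund policy"},
    output_summary="2 passages from the returns handbook",
)
trace.record(
    tool="draft_reply",
    reason="Enough context retrieved to answer the customer",
    inputs={"passages": 2},
    output_summary="reply drafted for human review",
)
print(trace.to_json())
```

A trace like this answers the three questions above for every step, and it gives reviewers and auditors something concrete to inspect.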

4. Keep humans in the loop

From approvals to post-hoc review, human involvement reinforces trust, ensures safety, and supports learning over time.

5. Think platform, not point solution

Don’t treat AI agents as one-off tools. Instead, build and deploy on a platform that can support multiple use cases, share learnings across implementations, and evolve governance policies over time. This gives you more control, easier scaling, and greater long-term value across your organization.

6. Measure and iterate

Establish clear success metrics on day one. Track performance and user adoption to understand what works, what doesn’t, and where to go from there.
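As a rough illustration of what tracking performance and adoption might involve, the sketch below computes three simple rates from a log of agent runs. The `Run` record, the metric names, and the sample data are all assumptions chosen for the example; the right metrics depend on the use case.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Run:
    user: str
    succeeded: bool   # task completed without human rework
    escalated: bool   # task handed off to a human


def summarize_runs(runs: List[Run], eligible_users: int) -> Dict[str, float]:
    if not runs or eligible_users <= 0:
        raise ValueError("need at least one run and one eligible user")
    total = len(runs)
    return {
        "success_rate": sum(r.succeeded for r in runs) / total,
        "escalation_rate": sum(r.escalated for r in runs) / total,
        # Adoption: share of eligible users who used the agent at least once.
        "adoption": len({r.user for r in runs}) / eligible_users,
    }


# Made-up sample log for illustration.
runs = [
    Run("ana", succeeded=True, escalated=False),
    Run("ana", succeeded=True, escalated=False),
    Run("ben", succeeded=False, escalated=True),
    Run("ben", succeeded=True, escalated=False),
]
metrics = summarize_runs(runs, eligible_users=4)
print(metrics)
```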

You can’t build the future with borrowed parts. As enterprises adopt agents, stitching together tools and APIs won’t be enough. What’s needed is intentional design, integrated systems, and the trust that comes from knowing how the machine thinks.

Start preparing for an agentic future now

AI agents are redefining how humans and machines collaborate. When designed with transparency and embedded in the right systems, agents can become trusted teammates: taking care of repetitive tasks, simplifying complexity, and unlocking time for more strategic work.

At Seekr, we’ve spent the past year building a platform grounded in these principles: trust, transparency, and real-world readiness. In the coming weeks, we’ll share how we’re approaching enterprise agents—not just as assistants, but as infrastructure.

If you’re thinking about where agents might fit in your business, now’s the time to start laying out the right foundations. Connect with a product expert to explore how agents can take you to the next step in your AI transformation.
