How Human + Machine Teaming Can Rewire Navy Decision Making

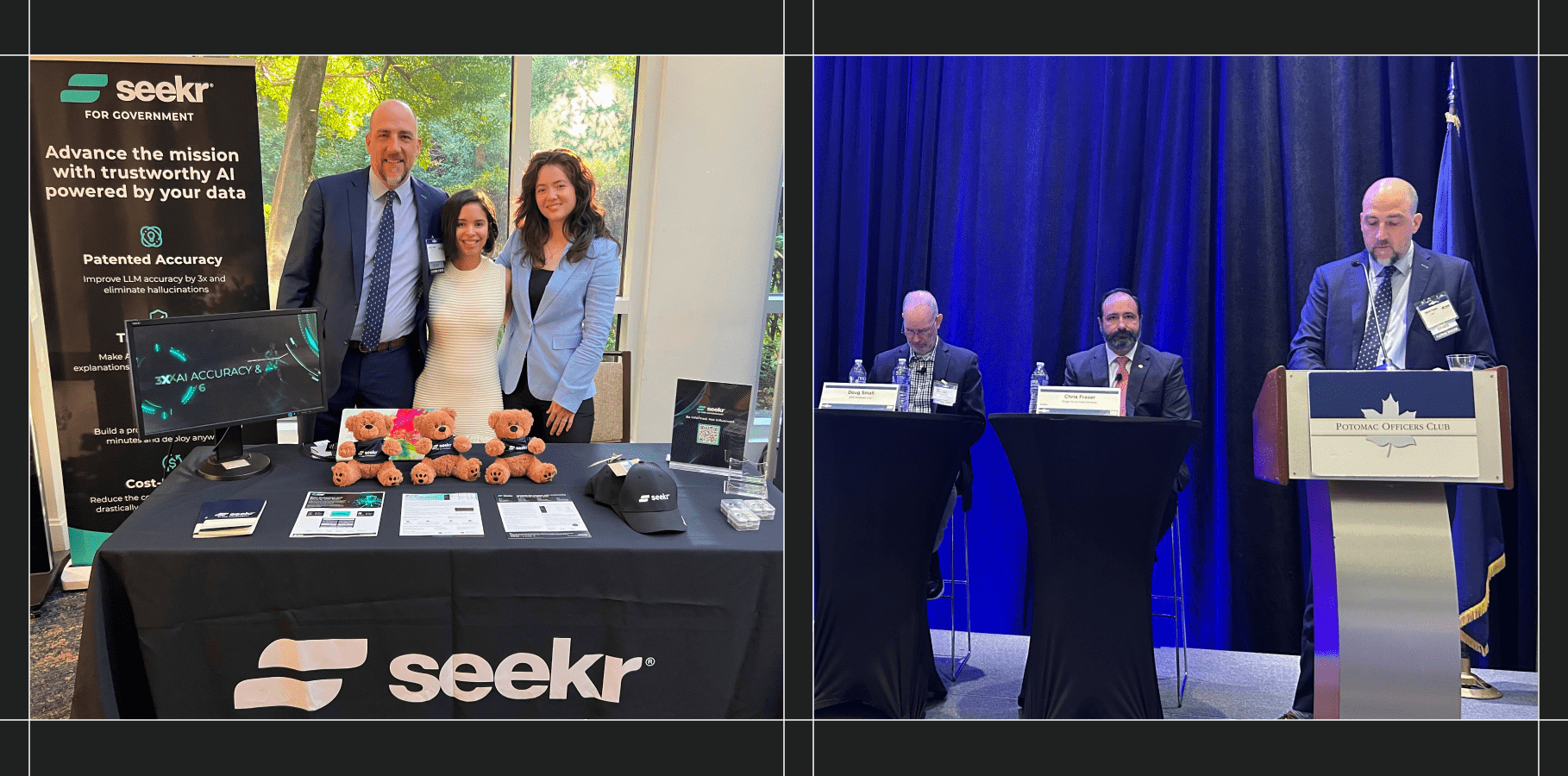

At the 2025 Potomac Officers Club Navy Summit, Seekr’s own Mark Fedeli, Director of Business Development, took the stage as moderator for one of the most talked-about discussions of the day: Optimizing Navy Decision Making with Generative AI—Balancing Human Insight with AI Precision.

The panel brought together four heavy-hitters in defense technology and strategy:

- Justin Fanelli, Chief Technology Officer, Department of the Navy

- Stuart Wagner, Chief Data & AI Officer, Department of the Navy

- Rear Adm. (ret.) Doug Small, President, DWS Strategies, and former leader of Project Overmatch

- Chris Fraser, Senior Vice President, Exiger, a leading AI-driven delivery and implementation expert

Together, these thought leaders explored how generative AI (GenAI) and agentic AI systems can transform decision-making speed, sharpen the Navy’s edge against adversaries, and accelerate adoption of commercial AI.

Setting the stage: The human + machine equation

The right paradigm to frame AI is human–machine teaming: AI can’t replace warfighters, but it can radically reshape how humans interact with data and make decisions. Fedeli challenged panelists to share not only what excites them about AI, but also the practical roadblocks slowing adoption.

Panelists spoke to a core paradox: GenAI has dazzled technologists and the public, but it hasn’t yet moved the productivity needle at scale. Often, the challenge is translating small gains into mission-level outcomes. AI must create measurable efficiencies that free resources to reinvest and accelerate capability development.

Speed as the new high ground

Panelists highlighted lessons learned from Ukraine, where the U.S. has observed measure–countermeasure cycles within 24 hours. That pace is achievable only through rapid learning from operational data. The panel argued that the Navy must adopt the same approach: automatically collect, classify, and leverage data to update systems in near real time.

But there’s a catch: today’s Authority to Operate (ATO) processes stall innovation. The Navy wants to bring in “untrusted” commercial software, calibrate it with data, and learn rapidly—but the environment doesn’t allow it yet. Ideally, the Navy would build a pipeline where commercial software moves through dev, test, staging, and production at the speed of need, not bureaucracy.

The AI adoption bottleneck

The panel echoed a key theme: The Navy does not have an innovation problem; it has an adoption problem.

Despite the hype cycle, most sailors and officers can’t use GenAI tools like ChatGPT on DoD networks. That gap reflects a fundamental mistrust of operators’ ability to safely integrate new tools. The panel agreed the solution is to trust the people closest to the fight, give them the right tools, streamline workflows, and shrink the time from PowerPoint briefings to executable orders. Once that foundation is in place, more advanced AI capabilities, like war-gaming or predictive analytics, can plug in seamlessly.

What’s next: Agentic AI

The panel pushed the conversation forward by noting that today’s LLMs are just the beginning. The real frontier is agentic AI: systems that can take goals and autonomously generate sub-decisions, code, or operational recommendations.

The panel cautioned that America’s adversaries are already weaponizing GenAI. Advanced persistent threats can use LLMs to accelerate reconnaissance, build spear-phishing campaigns, and exploit software supply chains at machine speed. That asymmetry means the Navy must invest aggressively in defensive and offensive AI capabilities to avoid being outpaced. Overmatch is the goal.

At the same time, there are positive examples, such as the Navy building an AI agent over a weekend that automated repetitive workflows and was deployed in 48 hours. It is proof that sailors and Marines can move quickly if given the right tools.

Prove mission impact with data

Throughout the session, the panel returned to a consistent message for industry: bring proof, not promises, and focus on the impact on the warfighter.

Government buyers are flooded with vendor pitches, but quantifiable outcomes help technology stand out: for example, benchmarks that show tasks done 5x, 10x, or even 30x faster, paired with compelling user stories.

For the Navy, data carries more weight than stories alone. It has already seen early examples in hackathons and pilot programs where GenAI compressed workflows by a factor of seven. Scaling those wins is the next mission.

Takeaways for industry and DoD

The panel closed with a proposed challenge to both sides:

For industry: Show up with irrefutable metrics and offers the Navy can’t refuse. Don’t just say “better”; quantify it.

For government: Shift from fearing risk to managing it. ATO processes must evolve to continuous, pipeline-driven accreditation so innovation can flow faster.

For both: Remember that adoption, not innovation, is the decisive bottleneck.

In a world where adversaries are already experimenting with agentic AI, the U.S. Navy cannot afford to be outpaced.
