With Seekr, explainability is built in

Validate outputs, debug behavior, and track performance with full explainability and observability across workflows.

Evidence behind every output

Link responses to training data, monitor agent behavior, and review model confidence to ensure outputs can be trusted.

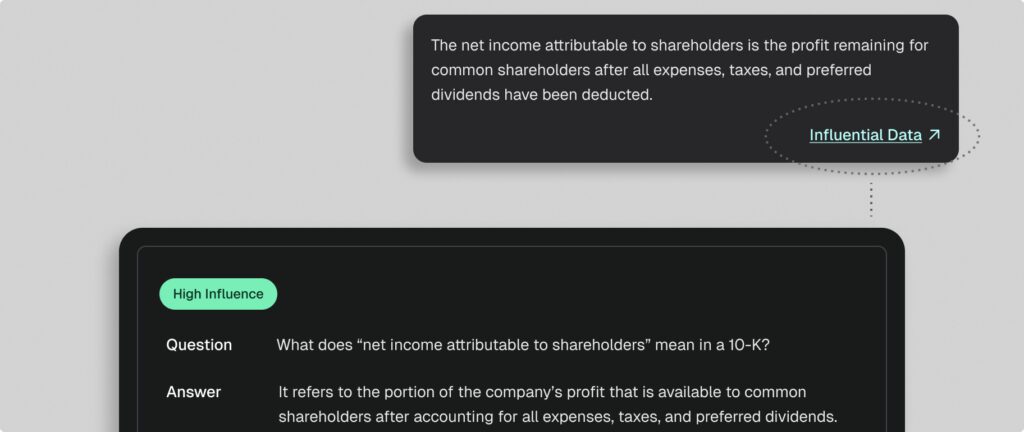

Trace model responses

SeekrFlow surfaces the Q&A pairs from your fine-tuning dataset that most influenced each response, giving teams clear attribution for model behavior. An internal LLM evaluates each candidate pair and scores it as High, Medium, or Low impact; pairs it judges irrelevant are filtered out. Users can click through to the original document chunk that generated each training pair, supporting data provenance and auditability. With this transparency, teams can validate responses, debug unexpected results, and identify where the training data needs refinement.
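As a rough illustration of the attribution flow described above, the sketch below shows how scored Q&A pairs might be filtered and ordered once an evaluator has labeled them. The `Impact` and `InfluentialPair` types, the `source_chunk_id` field, and the `surface_influential_pairs` helper are hypothetical. They are not SeekrFlow's API, and the evaluator LLM is stood in for by pre-assigned labels.

```python
from dataclasses import dataclass
from enum import IntEnum


class Impact(IntEnum):
    """Hypothetical impact labels; a higher value means more influence."""
    IRRELEVANT = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class InfluentialPair:
    """A fine-tuning Q&A pair plus a pointer back to its source chunk (hypothetical schema)."""
    question: str
    answer: str
    impact: Impact
    source_chunk_id: str


def surface_influential_pairs(pairs: list[InfluentialPair]) -> list[InfluentialPair]:
    """Drop pairs labeled irrelevant and order the rest from High to Low impact."""
    relevant = [p for p in pairs if p.impact != Impact.IRRELEVANT]
    # sorted() stays stable with reverse=True, so equal-impact pairs keep their input order.
    return sorted(relevant, key=lambda p: p.impact, reverse=True)


if __name__ == "__main__":
    scored = [
        InfluentialPair("What is the refund window?", "30 days.", Impact.MEDIUM, "policy.pdf#chunk-4"),
        InfluentialPair("Who founded the company?", "See the About page.", Impact.IRRELEVANT, "about.pdf#chunk-1"),
        InfluentialPair("Can refunds be partial?", "Yes, pro rata.", Impact.HIGH, "policy.pdf#chunk-7"),
        InfluentialPair("How are refunds issued?", "To the original card.", Impact.LOW, "policy.pdf#chunk-5"),
    ]
    for pair in surface_influential_pairs(scored):
        # Each surfaced pair carries its source chunk ID, which is what makes click-through provenance possible.
        print(pair.impact.name, pair.source_chunk_id, pair.question)
```

Keeping the source chunk ID on every pair is the design choice that matters here: it lets a reviewer move from an influential training example back to the exact document passage it came from.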

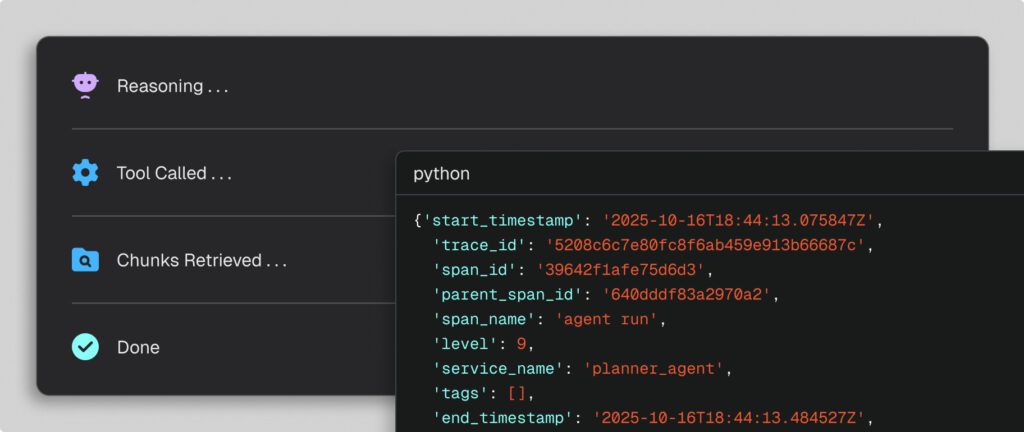

Gain full agent visibility

The observability platform gives complete visibility into how agents reason and act, from input to final output. Each run is captured as a full trace that logs every model call, tool invocation, and internal decision step. Users can drill into span-level metadata, including parameters, results, and timestamps, for precise debugging and latency analysis. Observability works out of the box with zero configuration and supports grouping, filtering, and run management across both the API and the SDK. This transparency accelerates iteration, reduces risk, and builds confidence in agent deployments.
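To make the trace and span idea concrete, here is a minimal sketch of a run modeled as a tree of spans, each with timestamps and metadata, plus a helper that finds the slowest leaf step for latency analysis. The `Span` fields, the `walk` and `slowest_leaf` helpers, and the model and tool names are assumptions for illustration only; this is not the SeekrFlow SDK or its trace format.

```python
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Span:
    """One step in an agent run (hypothetical schema): a model call, tool call, or decision step."""
    name: str
    kind: str                 # e.g. "llm", "tool", or "step"
    start_ms: float           # start timestamp, in milliseconds
    end_ms: float             # end timestamp, in milliseconds
    attributes: dict = field(default_factory=dict)   # parameters, results, etc.
    children: list["Span"] = field(default_factory=list)

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def walk(span: Span, depth: int = 0) -> Iterator[tuple[int, Span]]:
    """Yield (depth, span) pairs in depth-first order, root first."""
    yield depth, span
    for child in span.children:
        yield from walk(child, depth + 1)


def slowest_leaf(root: Span) -> Span:
    """Return the leaf span (one with no children) that took the longest."""
    leaves = [s for _, s in walk(root) if not s.children]
    return max(leaves, key=lambda s: s.duration_ms)


if __name__ == "__main__":
    run = Span("agent_run", "step", 0, 1850, children=[
        Span("plan", "llm", 0, 420, {"model": "example-model", "temperature": 0.2}),
        Span("search_docs", "tool", 430, 1510, {"query": "refund policy"}),
        Span("answer", "llm", 1520, 1850, {"model": "example-model"}),
    ])
    # Print an indented view of the trace, one line per span.
    for depth, span in walk(run):
        print("  " * depth + f"{span.kind}:{span.name} {span.duration_ms:.0f} ms")
    print("slowest:", slowest_leaf(run).name)
```

Looking only at leaf spans avoids blaming a parent step whose duration merely includes its children's time.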

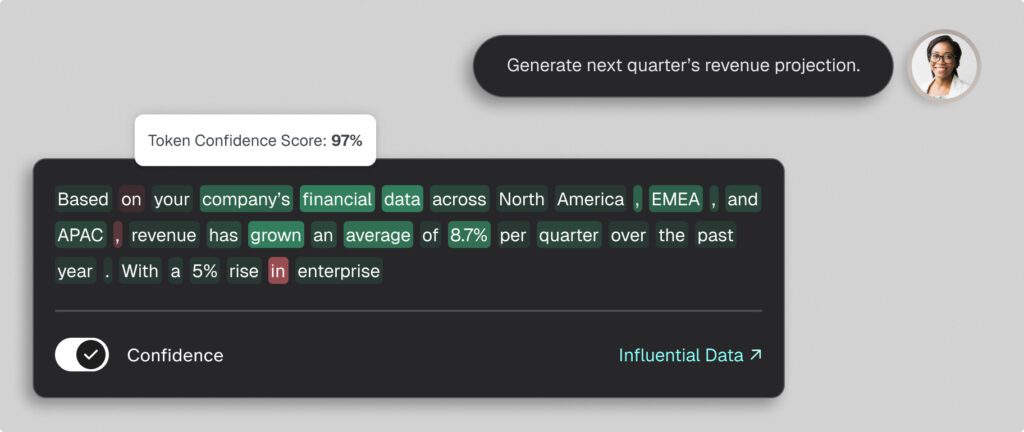

Validate with confidence

Every response in SeekrFlow is accompanied by confidence scores and input parameter visibility, giving teams deeper context for evaluation. Confidence scores highlight the likelihood that a model’s output is accurate or aligned with expectations, while input parameters show exactly what data shaped the response. Together, these tools help users interpret results, flag anomalies, and assess reliability before deploying outputs into production workflows.

Secure by design

With Seekr, your data remains yours. We never use it to train other models, and we give you full control to install our platform wherever your data resides. Seekr holds SOC 2 Type II certification, and the platform offers fine-grained access controls and the flexibility to run on your preferred cloud or hardware.


more accurate model responses

more relevance in responses

faster data preparation vs others

lower costs vs traditional approaches

minutes or less to build a production-grade LLM

Top enterprise and government leaders trust Seekr

“Seekr is setting a high bar for performant and efficient end-to-end AI development with its SeekrFlow platform, powered by AMD Instinct MI300X GPUs. We’re proud to work with Seekr as they showcase what’s possible using AMD Instinct GPUs on OCI’s AI enterprise infrastructure.”

Nagin Oliver

CVP, Business Development – AI and Cloud at AMD

FAQs

How does Q&A pair tracing work?

After inference, SeekrFlow shows the Q&A pairs that most influenced the output, scored by impact and linked to source data.

How are influence scores assigned?

An internal evaluator LLM scores each Q&A pair as High, Medium, Low, or Irrelevant, surfacing only the meaningful ones.

Why is explainability important?

Explainable AI builds trust by showing which data shaped outputs, helping teams validate, debug, and correct model behavior.

What does the Observability Platform show?

It captures full traces of every agent run, including reasoning steps, tool calls, and outputs for end-to-end visibility.

What is span-level debugging?

Each step in a run is logged as a span, complete with metadata like inputs, outputs, and timing, enabling precise debugging.

Do I need to configure observability?

No. It works right out of the box—every supported agent operation is automatically tracked without extra setup.

Accelerate your path to AI impact

Book a consultation with an AI expert. We’re here to help you speed up your time to AI ROI.

Request a demo