
Why Inference-First Infrastructure Is Reshaping AI Deployment

Spoiler: The most expensive part of AI isn’t training anymore.

At AMD’s Advancing AI 2025 event, every benchmark and roadmap slide focused on tokens-per-second and watts-per-query. Training breakthroughs still grab headlines, but on the P&L, inference is already the cost center that never sleeps.

If you’re deploying multi-agent systems or retrieval-augmented generation (RAG) pipelines, inference speed isn’t a bonus; it’s essential. Each agent hand-off or retrieval step adds another model call, so latency compounds across the pipeline. Low-latency responses keep decisions fast and let performance scale.

2024–25 data points:

McKinsey estimates that 70% of new data-center demand by 2030 will be “AI-ready capacity”, driven mainly by inference, not training.

(translation: your CFO already knows)

AMD’s MI355X preview shows 3–5× the tokens per second of its own MI300X generation, underscoring where silicon R&D dollars are going.

(chips don’t lie)

The cost per million LLM tokens, for a model of equivalent capability, dropped roughly 1000× from 2021–24 (“LLMflation”), or about 10× per year, turning inference economics into a board-level KPI.

(token bills got a haircut)

But speed alone isn’t enough. Enterprise teams also need control. That means platforms that are modular, open, and hardware-agnostic, so they can move quickly without getting boxed in.

Biggest model? Yawn. Fastest to production? Now we’re talking.

Why enterprises are breaking up with monoliths

Closed stacks slow-roll experiments and torch budgets. Teams need the freedom to test different models, adapt to evolving workloads, and deploy across environments without starting from zero each time.

And they want to do it without relying on one vendor, one model, or one tightly coupled toolchain.

Modularity and openness provide that freedom. So does infrastructure that works across hardware providers. This is where Seekr and AMD align.

SeekrFlow was built for this moment

- Hardware-agnostic orchestration – run on AMD today, swap in something else tomorrow.

- Full-stack abstraction, not just model serving – data prep → fine-tune → serve → measure, all in one pane of glass.

- Trust & compliance baked in – guardrails, explainability, SOC 2 Type I; no bolt-on MLOps circus.

- Open-model choice, zero hostage vibes – fine-tune open-source models or bring your own without vendor lock-in.

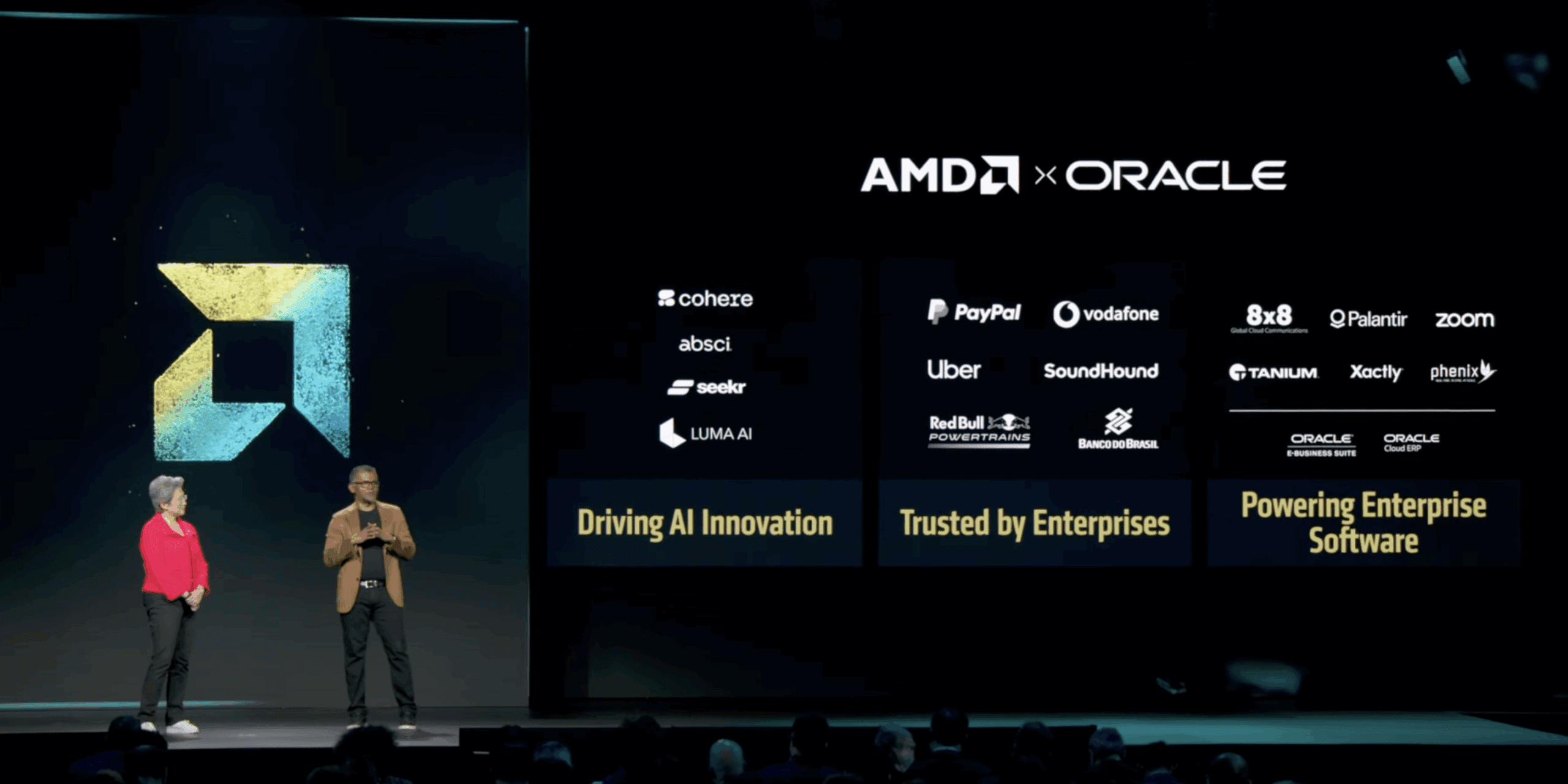

Seekr + Oracle + AMD: AI that’s ready to run

Our end-to-end platform was built to orchestrate multi-agent systems, RAG pipelines, and other real-world AI workflows with accuracy and explainability. At Advancing AI 2025, AMD spotlighted Seekr as a key partner powering inference-first AI on its GPU stack.

Now running on Oracle Cloud Infrastructure (OCI), SeekrFlow gives enterprises a trusted way to deploy AI workloads on scalable, enterprise-grade infrastructure without compromising flexibility or speed.

Inference is where AI’s bold promises meet reality: SLAs, budgets, the whole shebang. With AMD muscle, OCI runway, and SeekrFlow in the cockpit, every millisecond counts, and so does every cent.
